5 releases

| Version | Released |
|---|---|
| 0.1.4 | Oct 14, 2022 |
| 0.1.3 | Oct 14, 2022 |
| 0.1.2 | Oct 14, 2022 |
| 0.1.1 | Oct 14, 2022 |
| 0.1.0 | Oct 14, 2022 |


Neural-Network

A simple neural network written in Rust.

About

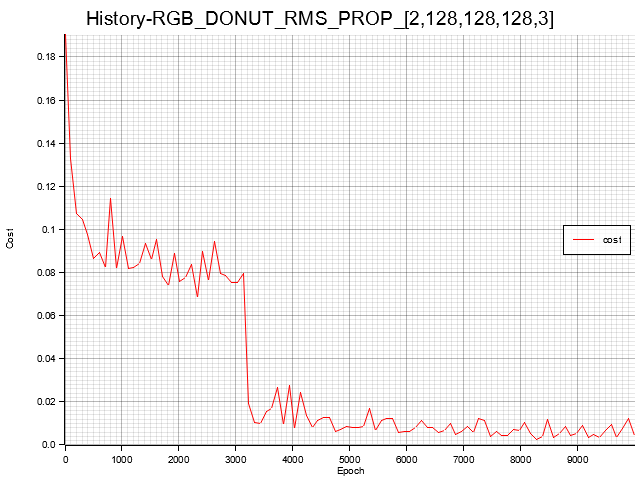

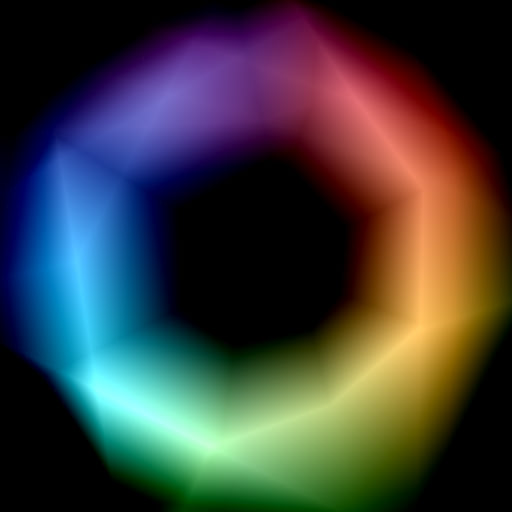

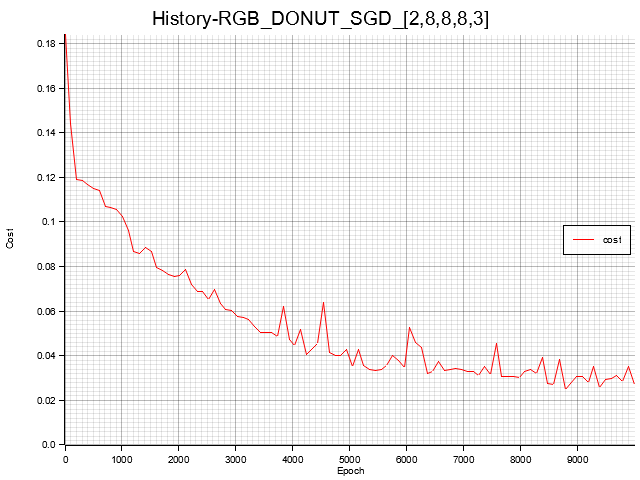

This neural network implementation uses gradient descent and is written from the ground up in Rust. You can specify the shape of the network as well as its learning rate. Additionally, you can choose from many predefined datasets, for example the XOR and CIRCLE datasets, which represent the respective functions inside the unit square, as well as more complicated datasets like RGB_DONUT, which represents a donut-like shape with a rainbow-like color transition.
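To make the idea of a dataset "inside the unit square" concrete, here is a minimal sketch of how XOR-like and circle-like targets could be generated. This is not the crate's code: the names `Sample`, `xor_target`, `circle_target`, `grid_dataset`, the 0.5 thresholds, and the circle radius are all assumptions chosen for illustration.

```rust
/// A labelled sample: a point in the unit square and its target value.
/// (Hypothetical type, not taken from the crate.)
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Sample {
    pub x: f64,
    pub y: f64,
    pub target: f64,
}

/// XOR extended to the unit square: 1.0 when exactly one coordinate
/// lies above 0.5, otherwise 0.0. The 0.5 threshold is an assumption.
pub fn xor_target(x: f64, y: f64) -> f64 {
    if (x > 0.5) != (y > 0.5) { 1.0 } else { 0.0 }
}

/// Circle: 1.0 inside a disc centred at (0.5, 0.5), otherwise 0.0.
/// The radius of 0.3 is an assumption.
pub fn circle_target(x: f64, y: f64) -> f64 {
    const RADIUS: f64 = 0.3;
    let (dx, dy) = (x - 0.5, y - 0.5);
    if dx * dx + dy * dy <= RADIUS * RADIUS { 1.0 } else { 0.0 }
}

/// Evaluates `f` at the cell centres of an `n` x `n` grid over the unit square.
pub fn grid_dataset(n: usize, f: fn(f64, f64) -> f64) -> Vec<Sample> {
    let mut samples = Vec::with_capacity(n * n);
    for i in 0..n {
        for j in 0..n {
            let x = (i as f64 + 0.5) / n as f64;
            let y = (j as f64 + 0.5) / n as f64;
            samples.push(Sample { x, y, target: f(x, y) });
        }
    }
    samples
}
```

Calling `grid_dataset(4, xor_target)` yields 16 samples, one per grid cell. A color dataset such as RGB_DONUT would use three target values per point instead of one.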

Below, you can see a training process, where the network is trying to learn the color-values of the RGB_DONUT dataset.

Features

The following features are currently implemented:

- Optimizers

- Adam

- RMSProp

- SGD

- Loss Functions

- Quadratic

- Activation Functions

- Sigmoid

- ReLU

- Layers

- Dense

- Plotting

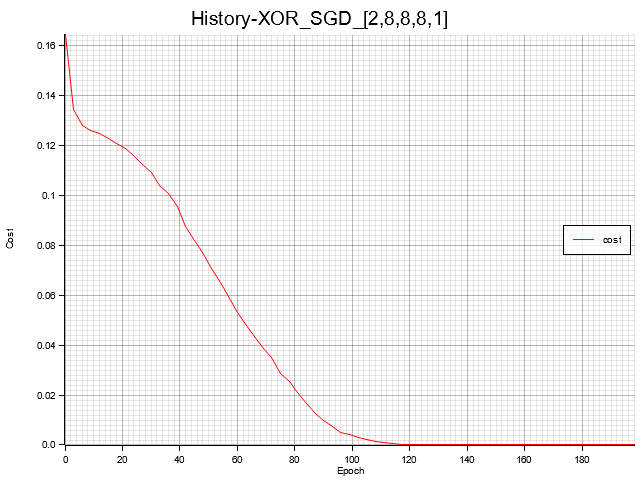

- Plotting the cost-history during training

- Plotting the final predictions inside, either in grayscale or RGB
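For readers unfamiliar with the building blocks listed above, the following sketch shows textbook versions of the two activation functions, a quadratic loss, and SGD and Adam update steps. It is not the crate's implementation: all names are hypothetical, the factor of 0.5 in the quadratic cost is an assumed convention, and the Adam defaults (0.9, 0.999, 1e-8) are the commonly used ones rather than values taken from the crate.

```rust
/// Sigmoid activation and its derivative.
pub fn sigmoid(z: f64) -> f64 {
    1.0 / (1.0 + (-z).exp())
}

pub fn sigmoid_prime(z: f64) -> f64 {
    let s = sigmoid(z);
    s * (1.0 - s)
}

/// ReLU activation and its derivative (taken as 0 at z = 0).
pub fn relu(z: f64) -> f64 {
    z.max(0.0)
}

pub fn relu_prime(z: f64) -> f64 {
    if z > 0.0 { 1.0 } else { 0.0 }
}

/// Quadratic cost: 0.5 * sum((prediction - target)^2).
/// The 0.5 factor is an assumed convention.
pub fn quadratic_cost(pred: &[f64], target: &[f64]) -> f64 {
    pred.iter().zip(target).map(|(p, t)| 0.5 * (p - t).powi(2)).sum()
}

/// Plain SGD: move each parameter against its gradient.
pub fn sgd_step(params: &mut [f64], grads: &[f64], lr: f64) {
    for (p, g) in params.iter_mut().zip(grads) {
        *p -= lr * g;
    }
}

/// Adam optimizer state for a flat parameter vector (hypothetical type).
pub struct Adam {
    pub lr: f64,
    pub beta1: f64,
    pub beta2: f64,
    pub eps: f64,
    m: Vec<f64>, // running mean of gradients
    v: Vec<f64>, // running mean of squared gradients
    t: i32,      // number of steps taken so far
}

impl Adam {
    pub fn new(n_params: usize, lr: f64) -> Self {
        Adam {
            lr,
            beta1: 0.9,
            beta2: 0.999,
            eps: 1e-8,
            m: vec![0.0; n_params],
            v: vec![0.0; n_params],
            t: 0,
        }
    }

    /// One Adam update with bias-corrected moment estimates.
    pub fn step(&mut self, params: &mut [f64], grads: &[f64]) {
        self.t += 1;
        let c1 = 1.0 - self.beta1.powi(self.t);
        let c2 = 1.0 - self.beta2.powi(self.t);
        for i in 0..params.len() {
            self.m[i] = self.beta1 * self.m[i] + (1.0 - self.beta1) * grads[i];
            self.v[i] = self.beta2 * self.v[i] + (1.0 - self.beta2) * grads[i] * grads[i];
            let m_hat = self.m[i] / c1;
            let v_hat = self.v[i] / c2;
            params[i] -= self.lr * m_hat / (v_hat.sqrt() + self.eps);
        }
    }
}
```

RMSProp follows the same pattern as Adam but keeps only the running mean of squared gradients and applies no bias correction.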

Usage

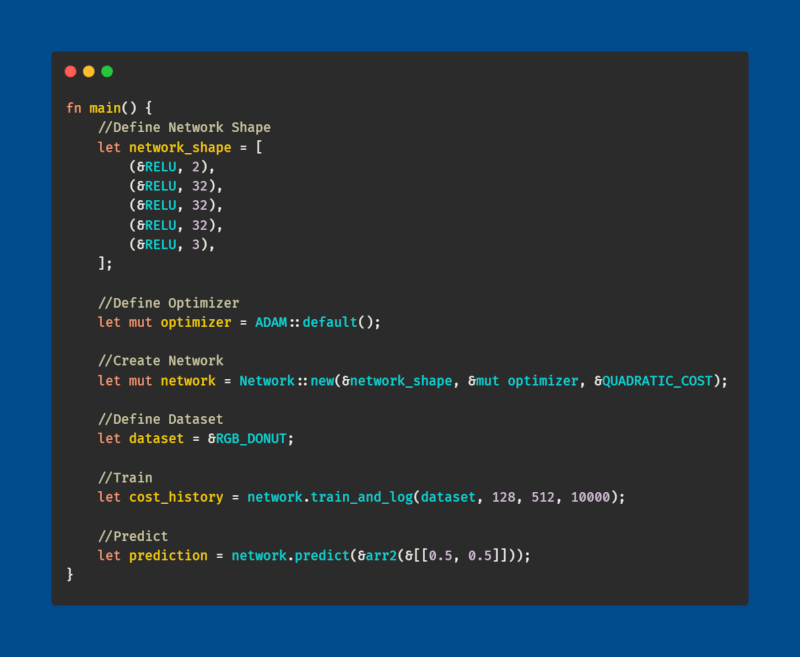

The process of creating and training the neural network is pretty straightforward.
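The crate's own API is not reproduced here. As a rough illustration of the same workflow (choose a shape and a learning rate, then train on a dataset with gradient descent), here is a self-contained sketch with no dependencies. Every name in it (`Network`, `Dense`, `Lcg`, `train`, `XOR`) is hypothetical, and it uses plain per-sample SGD with sigmoid layers and a quadratic cost as a stand-in for whatever the crate does internally.

```rust
/// Tiny deterministic pseudo-random generator (an LCG), so the sketch needs no crates.
struct Lcg(u64);

impl Lcg {
    /// Returns a value in [-1, 1).
    fn next_f64(&mut self) -> f64 {
        self.0 = self.0.wrapping_mul(6364136223846793005).wrapping_add(1442695040888963407);
        ((self.0 >> 11) as f64 / (1u64 << 53) as f64) * 2.0 - 1.0
    }
}

fn sigmoid(z: f64) -> f64 {
    1.0 / (1.0 + (-z).exp())
}

/// A dense (fully connected) layer with sigmoid activation.
struct Dense {
    weights: Vec<Vec<f64>>, // weights[j][k]: input k -> output j
    biases: Vec<f64>,
}

impl Dense {
    fn new(inputs: usize, outputs: usize, rng: &mut Lcg) -> Self {
        let mut weights = Vec::with_capacity(outputs);
        let mut biases = Vec::with_capacity(outputs);
        for _ in 0..outputs {
            let row: Vec<f64> = (0..inputs).map(|_| rng.next_f64()).collect();
            weights.push(row);
            biases.push(rng.next_f64());
        }
        Dense { weights, biases }
    }

    fn forward(&self, input: &[f64]) -> Vec<f64> {
        let mut out = Vec::with_capacity(self.biases.len());
        for (row, b) in self.weights.iter().zip(&self.biases) {
            let z: f64 = row.iter().zip(input).map(|(w, x)| w * x).sum::<f64>() + b;
            out.push(sigmoid(z));
        }
        out
    }
}

pub struct Network {
    layers: Vec<Dense>,
    learning_rate: f64,
}

impl Network {
    /// `shape` lists layer sizes, e.g. `[2, 4, 1]` for 2 inputs, 4 hidden units, 1 output.
    pub fn new(shape: &[usize], learning_rate: f64, seed: u64) -> Self {
        let mut rng = Lcg(seed);
        let layers: Vec<Dense> =
            shape.windows(2).map(|w| Dense::new(w[0], w[1], &mut rng)).collect();
        Network { layers, learning_rate }
    }

    pub fn predict(&self, input: &[f64]) -> Vec<f64> {
        self.layers.iter().fold(input.to_vec(), |a, layer| layer.forward(&a))
    }

    /// One SGD step on a single sample; returns the quadratic cost before the update.
    pub fn train_sample(&mut self, input: &[f64], target: &[f64]) -> f64 {
        // Forward pass, keeping the activations of every layer.
        let mut acts = vec![input.to_vec()];
        for layer in &self.layers {
            let next = layer.forward(acts.last().unwrap());
            acts.push(next);
        }
        let output = acts.last().unwrap();
        let cost: f64 = output.iter().zip(target).map(|(o, t)| 0.5 * (o - t).powi(2)).sum();
        // Backward pass: `grad` holds dC/da for the current layer's outputs.
        let mut grad: Vec<f64> = output.iter().zip(target).map(|(o, t)| o - t).collect();
        let lr = self.learning_rate;
        for (i, layer) in self.layers.iter_mut().enumerate().rev() {
            let (a_in, a_out) = (&acts[i], &acts[i + 1]);
            let mut grad_in = vec![0.0; a_in.len()];
            for j in 0..a_out.len() {
                // dC/dz = dC/da * sigmoid'(z), where sigmoid'(z) = a * (1 - a).
                let delta = grad[j] * a_out[j] * (1.0 - a_out[j]);
                for k in 0..a_in.len() {
                    grad_in[k] += layer.weights[j][k] * delta; // uses the pre-update weight
                    layer.weights[j][k] -= lr * delta * a_in[k];
                }
                layer.biases[j] -= lr * delta;
            }
            grad = grad_in;
        }
        cost
    }
}

/// The four corner points of the XOR problem.
pub const XOR: [([f64; 2], [f64; 1]); 4] =
    [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]), ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])];

/// Trains for `epochs` passes over `data` and returns the mean cost per epoch.
pub fn train(net: &mut Network, data: &[([f64; 2], [f64; 1])], epochs: usize) -> Vec<f64> {
    let mut history = Vec::with_capacity(epochs);
    for _ in 0..epochs {
        let mut total = 0.0;
        for (x, t) in data {
            total += net.train_sample(x, t);
        }
        history.push(total / data.len() as f64);
    }
    history
}
```

A typical call from `main` would be `let mut net = Network::new(&[2, 4, 1], 0.5, 42);` followed by `let history = train(&mut net, &XOR, 5000);`. The returned `history` is the kind of per-epoch cost series that a cost-history plot would display.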

Example Training Process

Below, you can see how the network learns:

Learning Animation

Final Result

Cool training results

RGB_DONUT

Big Network

Small Network

XOR_PROBLEM
