3 unstable releases

| Version | Released |
|---|---|
| 0.1.2 (new) | Apr 5, 2025 |
| 0.1.0 | Mar 28, 2025 |
| 0.0.1 | Mar 28, 2025 |

🪙 toktkn

toktkn is a byte-pair encoding (BPE) tokenizer implemented in Rust and exposed to Python through PyO3 bindings.

from toktkn import BPETokenizer, TokenizerConfig

# create new tokenizer

config = TokenizerConfig(vocab_size=10)

bpe = BPETokenizer(config)

# build encoding rules on some corpus

bpe.train("some really interesting training data here...")

text = "rust is pretty fun 🦀"

assert bpe.decode(bpe.encode(text)) == text

# serialize to disk

bpe.save_pretrained("tokenizer.json")

del bpe

bpe = BPETokenizer.from_pretrained("tokenizer.json")

assert len(bpe) == 10
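For readers new to BPE, here is a minimal pure-Python sketch of the general technique behind `train`, `encode`, and `decode`. It is an illustration only, not toktkn's Rust implementation: the function names (`train_bpe`, `merge`, `encode`, `decode`), the byte-level base vocabulary of 256 ids, and the `num_merges` parameter are all assumptions made for this example.

```python
from collections import Counter


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` in `ids` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merge rules from the UTF-8 bytes of `text`."""
    ids = list(text.encode("utf-8"))
    merges = []
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break  # fewer than two tokens left, nothing to merge
        pair = max(pairs, key=pairs.get)  # most frequent adjacent pair
        ids = merge(ids, pair, 256 + len(merges))  # new ids start after the 256 byte values
        merges.append(pair)
    return merges


def encode(text, merges):
    """Apply the learned merges, in the order they were learned, to new text."""
    ids = list(text.encode("utf-8"))
    for rank, pair in enumerate(merges):
        ids = merge(ids, pair, 256 + rank)
    return ids


def decode(ids, merges):
    """Expand every token id back into bytes, then decode as UTF-8."""
    vocab = {i: bytes([i]) for i in range(256)}
    for rank, (a, b) in enumerate(merges):
        vocab[256 + rank] = vocab[a] + vocab[b]
    return b"".join(vocab[i] for i in ids).decode("utf-8")


merges = train_bpe("some really interesting training data here...", num_merges=10)
text = "rust is pretty fun 🦀"
assert decode(encode(text, merges), merges) == text
```

Because the base vocabulary covers every byte value, the round trip holds even for text the merges were never trained on, such as the emoji above.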

Install

Install toktkn from PyPI with the following command:

pip install toktkn

Note: if you want to build from source, make sure cargo is installed!

Performance

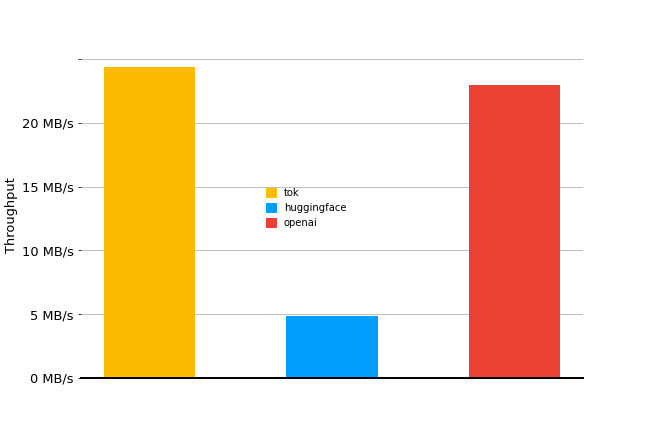

Slightly faster than OpenAI's tiktoken and a lot quicker than 🤗 tokenizers!

Performance was measured on 2.5 MB of text from the wikitext test split, against OpenAI's tiktoken GPT-2 tokenizer (tiktoken==0.6.0) and the 🤗 tokenizers implementation (tokenizers==0.19.1).
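A comparison like this can be reproduced with a small timing harness. The sketch below is not the script used for the chart above; `throughput_mb_per_s` is a hypothetical helper, and the stand-in encoder only exists to keep the example self-contained. In practice you would pass in the `encode` method of each tokenizer you want to compare.

```python
import time


def throughput_mb_per_s(encode, text, repeats=3):
    """Return the best-of-`repeats` encoding throughput in MB/s for `encode(text)`."""
    n_bytes = len(text.encode("utf-8"))
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        encode(text)
        best = min(best, time.perf_counter() - start)
    # Guard against a zero reading on very coarse timers.
    return n_bytes / max(best, 1e-9) / 1e6


# Stand-in encoder (assumption for illustration): raw UTF-8 byte values.
# Swap in a real tokenizer's encode callable, e.g. bpe.encode from the
# usage example above, to measure it.
def byte_encode(s):
    return list(s.encode("utf-8"))


sample = "rust is pretty fun 🦀 " * 10_000
print(f"{throughput_mb_per_s(byte_encode, sample):.1f} MB/s")
```

Taking the best of several runs reduces noise from caching and other processes; for a fair comparison, every tokenizer should see the same text and the same number of repeats.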
