4 releases (2 breaking)

| Version | Release date |
|---|---|
| 0.3.1 | Nov 20, 2023 |
| 0.3.0 | Nov 20, 2023 |
| 0.2.0 | Nov 15, 2023 |
| 0.1.0 | Nov 11, 2023 |


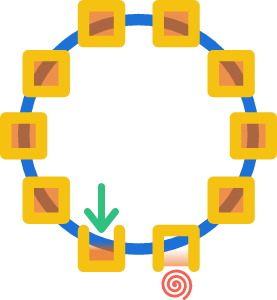

Rolling log files with compression

This library offers the struct LogFileInitializer to get a log file inside a logs directory.

It uses a rolling strategy that works like the wheel in the image above 🎡.

The initializer has the fields directory, filename, max_n_old_files and preferred_max_file_size_mib.

Rolling will be applied to the file directory/filename if all of the following conditions are true:

- The file already exists.
- The file has a size >= preferred_max_file_size_mib (in MiB).
- No rolling was already done today.
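The three conditions can be read as one predicate. The following is a minimal sketch, not the crate's actual code: the function `should_roll` and its parameters are hypothetical, the file size is passed in bytes (`None` meaning the file does not exist yet), and 1 MiB is taken to be 2^20 bytes.

```rust
/// Bytes in one MiB (2^20).
const MIB: u64 = 1024 * 1024;

/// Hypothetical helper mirroring the three rolling conditions.
/// `existing_size` is the current file size in bytes, or `None` if the file does not exist.
fn should_roll(existing_size: Option<u64>, preferred_max_file_size_mib: u64, rolled_today: bool) -> bool {
    match existing_size {
        // Condition 1 fails: there is no file to roll.
        None => false,
        // Conditions 2 and 3: the size threshold is reached and no roll happened today.
        Some(size) => size >= preferred_max_file_size_mib * MIB && !rolled_today,
    }
}
```

In a real program, the size could come from `std::fs::metadata(path).map(|m| m.len()).ok()`, which, as a simplification, also turns other I/O errors into `None`.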

In the case of rolling, the file will be compressed with GZIP to directory/filename-YYYYMMDD.gz with today's date (in UTC).
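The archive name only needs today's date in UTC. As a std-only sketch (the helpers `civil_from_days`, `today_utc`, and `archive_name` are hypothetical and not part of logs-wheel), the date can be derived from the Unix timestamp with Howard Hinnant's `civil_from_days` algorithm; a date crate such as `chrono` or `time` would normally handle this.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

/// Converts days since 1970-01-01 into a (year, month, day) date in UTC.
/// Howard Hinnant's `civil_from_days` for the proleptic Gregorian calendar.
fn civil_from_days(days: i64) -> (i64, u32, u32) {
    let z = days + 719_468;
    let era = (if z >= 0 { z } else { z - 146_096 }) / 146_097;
    let doe = z - era * 146_097; // day of era, [0, 146096]
    let yoe = (doe - doe / 1_460 + doe / 36_524 - doe / 146_096) / 365; // year of era, [0, 399]
    let y = yoe + era * 400;
    let doy = doe - (365 * yoe + yoe / 4 - yoe / 100); // day of year starting in March, [0, 365]
    let mp = (5 * doy + 2) / 153; // March-based month, [0, 11]
    let d = (doy - (153 * mp + 2) / 5 + 1) as u32; // [1, 31]
    let m = (if mp < 10 { mp + 3 } else { mp - 9 }) as u32; // [1, 12]
    // January and February belong to the next civil year.
    (if m <= 2 { y + 1 } else { y }, m, d)
}

/// Today's date in UTC from the system clock (Unix time has no leap seconds).
fn today_utc() -> (i64, u32, u32) {
    let secs = SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .expect("system clock is before 1970")
        .as_secs();
    civil_from_days((secs / 86_400) as i64)
}

/// Builds `filename-YYYYMMDD.gz` for the given date.
fn archive_name(filename: &str, (year, month, day): (i64, u32, u32)) -> String {
    format!("{filename}-{year:04}{month:02}{day:02}.gz")
}
```

For example, day 19673 after the Unix epoch is 2023-11-12, so `archive_name("test", civil_from_days(19_673))` yields `test-20231112.gz`.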

If rolling was applied and the number of old files exceeds max_n_old_files, the oldest file will be deleted.
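Because the date is written as YYYYMMDD, sorting the archive names as strings also sorts them by date, so finding the oldest files is a sort followed by a slice. A minimal sketch, assuming a hypothetical helper `archives_to_delete` that receives the file names found in the directory and only counts names of the form `filename-YYYYMMDD.gz`:

```rust
/// Hypothetical helper: returns the archives to delete so that at most
/// `max_n_old_files` archives of `filename` remain (oldest are deleted first).
fn archives_to_delete(filename: &str, names: &[&str], max_n_old_files: usize) -> Vec<String> {
    let prefix = format!("{filename}-");
    let mut archives: Vec<&str> = names
        .iter()
        .copied()
        // Keep only `filename-` + 8 digits + `.gz`, so other files are ignored.
        .filter(|name| {
            name.strip_prefix(prefix.as_str())
                .and_then(|rest| rest.strip_suffix(".gz"))
                .map_or(false, |date| date.len() == 8 && date.bytes().all(|b| b.is_ascii_digit()))
        })
        .collect();
    // Lexicographic order of YYYYMMDD is chronological order: oldest first.
    archives.sort_unstable();
    let n_excess = archives.len().saturating_sub(max_n_old_files);
    archives[..n_excess].iter().map(|name| name.to_string()).collect()
}
```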

It is important to know that rolling happens only on initialization. The init method returns a normal File, so no rolling is applied if your program runs for multiple days without being restarted.

This has the following advantages:

- No overhead on writing to check when to roll.

- No latency spikes during the actual rolling.

- All logs of one run (from start to termination) are inside one file.

Your program should be restarted every couple of days anyway, for example when you restart the host after a system update.

But if you really want rolling every fixed number of days while your program is running, you can use your own type that calls the init method under the hood when rolling should happen and then swaps the file.
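A minimal sketch of such a type, assuming nothing about logs-wheel beyond what is described here: the hypothetical `PeriodicReinit` wraps any writer and calls a factory closure again once `period` has elapsed. In practice, the closure could perform the `init` call (assuming its error converts into `std::io::Error`) with a `period` of one day; here it is generic, so the sketch only needs the standard library.

```rust
use std::io::{self, Write};
use std::time::{Duration, Instant};

/// Hypothetical wrapper: writes to `writer` and replaces it with a fresh one
/// from `make_writer` once `period` has elapsed since the last (re)initialization.
struct PeriodicReinit<W, F> {
    make_writer: F,
    writer: W,
    period: Duration,
    last_init: Instant,
}

impl<W, F> PeriodicReinit<W, F>
where
    W: Write,
    F: FnMut() -> io::Result<W>,
{
    fn new(mut make_writer: F, period: Duration) -> io::Result<Self> {
        let writer = make_writer()?;
        Ok(Self { make_writer, writer, period, last_init: Instant::now() })
    }

    fn reinit_if_due(&mut self) -> io::Result<()> {
        if self.last_init.elapsed() >= self.period {
            // Flush anything buffered in the old writer before swapping it out.
            self.writer.flush()?;
            // The old writer is dropped (a file would be closed) on assignment.
            self.writer = (self.make_writer)()?;
            self.last_init = Instant::now();
        }
        Ok(())
    }
}

impl<W, F> Write for PeriodicReinit<W, F>
where
    W: Write,
    F: FnMut() -> io::Result<W>,
{
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.reinit_if_due()?;
        self.writer.write(buf)
    }

    fn flush(&mut self) -> io::Result<()> {
        self.writer.flush()
    }
}
```

Note the trade-off: this checks the clock on every write, which gives up the "no overhead on writing" advantage listed above.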

For more details, read the documentation of the LogFileInitializer struct and its init method.

Example

We will see what happens when calling the init method with the following field values for the initializer:

```rust
use logs_wheel::LogFileInitializer;

fn main() -> std::io::Result<()> {
    // `log_file` is a normal `std::fs::File` for logs/test.
    let log_file = LogFileInitializer {
        directory: "logs",
        filename: "test",
        max_n_old_files: 2,
        preferred_max_file_size_mib: 1,
    }
    .init()?;

    Ok(())
}
```

This method call always returns the file logs/test in the end. The following sections discuss its side effects.

First call

The first call will create the directory logs/ in the current directory (because we specified a relative path) with the file test inside it.

Content of logs/:

- test
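Creating the directory and opening the file in either mode can be sketched with the standard library alone. This is an illustration, not the crate's implementation: the helper `open_log_file` and its `truncate` flag are hypothetical, standing in for the "returned after truncation" and "open in append mode" cases described in the later calls.

```rust
use std::fs::{self, File, OpenOptions};
use std::io;
use std::path::Path;

/// Hypothetical helper: creates `directory` (and any missing parents) and opens
/// `directory/filename`, either truncated (after rolling) or in append mode.
fn open_log_file(directory: &Path, filename: &str, truncate: bool) -> io::Result<File> {
    // Like `mkdir -p`: succeeds if the directory already exists.
    fs::create_dir_all(directory)?;
    let mut options = OpenOptions::new();
    // Create the file on the first call.
    options.create(true);
    if truncate {
        options.write(true).truncate(true);
    } else {
        options.append(true);
    }
    options.open(directory.join(filename))
}
```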

Later call

If we call the same method again on the date 2023-11-12 (in UTC) and the size of the file logs/test is at least 1 MiB, the file will be compressed to logs/test-20231112.gz.

The file logs/test will be returned after truncation (empty file).

Content of logs/:

- test
- test-20231112.gz

Call on the same day

If we call the same method again on the same day, nothing will change, even when the size of logs/test is at least 1 MiB.

The file logs/test will be opened in append mode.

Content of logs/:

- test
- test-20231112.gz

Call on a later day

If we call the same method again on the next day and the size of the file logs/test is at least 1 MiB, the file will be compressed to logs/test-20231113.gz.

The file logs/test will be returned after truncation.

Content of logs/:

- test
- test-20231113.gz
- test-20231112.gz

Call on a later day with an exceeded number of old files

Now we already have 2 old files, which means that we have reached the limit max_n_old_files.

If we call the same method again on the next day and the size of the file logs/test is at least 1 MiB, the file will be compressed to logs/test-20231114.gz.

The oldest file test-20231112.gz will be deleted.

The file logs/test will be returned after truncation.

Content of logs/:

- test
- test-20231114.gz
- test-20231113.gz
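The whole walkthrough can be checked with a small in-memory model. This is a hypothetical simulation, not logs-wheel code: sizes are whole MiB, dates are `YYYYMMDD` strings, and it assumes that "rolling was already done today" means today's archive already exists.

```rust
/// Hypothetical in-memory model of the logs directory.
struct LogsDir {
    filename: String,
    max_n_old_files: usize,
    preferred_max_file_size_mib: u64,
    // `None` until the first call creates the file.
    current_size_mib: Option<u64>,
    // Archive names such as "test-20231112.gz".
    archives: Vec<String>,
}

impl LogsDir {
    /// Simulates one `init` call on `date` ("YYYYMMDD").
    fn init(&mut self, date: &str) {
        let archive = format!("{}-{}.gz", self.filename, date);
        let rolled_today = self.archives.contains(&archive);
        match self.current_size_mib {
            Some(size) if size >= self.preferred_max_file_size_mib && !rolled_today => {
                self.archives.push(archive); // compress to today's archive
                self.current_size_mib = Some(0); // truncate the log file
                self.archives.sort_unstable(); // oldest first
                while self.archives.len() > self.max_n_old_files {
                    self.archives.remove(0); // delete the oldest archive
                }
            }
            Some(_) => {} // append mode, nothing else changes
            None => self.current_size_mib = Some(0), // first call creates the file
        }
    }

    /// Directory content in the order used above: the log file, then newest archive first.
    fn listing(&self) -> Vec<String> {
        let mut names = vec![self.filename.clone()];
        names.extend(self.archives.iter().rev().cloned());
        names
    }
}
```

Replaying the calls above against this model, with the file grown to 2 MiB before each later call, reproduces the listings shown.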

Tracing Subscriber

You can use this library with the tracing ecosystem!

Here is an example of how to use the returned file as the writer of a tracing subscriber:

```rust
use logs_wheel::LogFileInitializer;
use std::sync::Mutex;

fn main() -> std::io::Result<()> {
    let log_file = LogFileInitializer {
        directory: "logs",
        filename: "test",
        max_n_old_files: 2,
        preferred_max_file_size_mib: 1,
    }
    .init()?;

    // `tracing_subscriber` implements `MakeWriter` for `Mutex<W>` where `W: std::io::Write`.
    let writer = Mutex::new(log_file);

    let subscriber = tracing_subscriber::fmt()
        .with_writer(writer)
        // … (other `SubscriberBuilder` methods)
        .finish();

    Ok(())
}
```

Similar crates

- tracing-appender: Offers RollingFileAppender, which rolls every fixed amount of time while the program is running, but it doesn't compress old log files and doesn't delete any. logs-wheel can be used as an alternative. It can also be used in combination with NonBlocking for non-blocking writes.
- rolling-file: Provides rolling every fixed amount of time while the program is running. No compression. It has to rename every file during the rolling because the files are numbered.
